Resources — SMART preprints

Version: last updated on 30 August 2024

On this page, you can find information about:

- Structured expert elicitation with the IDEA protocol

- Logging on (includes platform demonstration video)

- Answering the questions

- The repliCATS Code of Conduct

- Downloadable Guide

Structured expert elicitation with the IDEA protocol

The repliCATS approach is based on a type of Delphi protocol called IDEA.

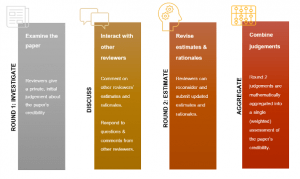

IDEA stands for “Investigate”, “Discuss”, “Estimate” and “Aggregate”, the four steps in this structured elicitation process.

As used in the repliCATS project, the IDEA protocol asks participants/reviewers to:

- Independently Investigate the paper, providing their personal judgement on multiple credibility dimensions of the central claim, and commenting on their thinking. Reviewers will be asked to give a quantitative assessment for each dimension and – for some questions – indicate their confidence using lower and upper bound estimates.

- Engage in an online or in-person synchronous Discussion with other reviewers of the same paper. Upon submitting their initial judgements, reviewers will be able to see the anonymised estimates as well as the aggregated judgement across all reviewers. At this point, reviewers can also comment on others’ assessments and their written rationales. This phase is intended to help resolve uncertainties and allow reviewers to investigate the strength of the evidence for and against certain judgements.

- Submit a revised quantitative Estimate for each of the credibility dimensions and describe how their thinking has changed.

The repliCATS team will compute an Aggregate of the group judgements which comprises the final assessment of the paper’s overall credibility.

More information on the IDEA protocol can be found here.

The repliCATS platform

Logging on

We have created accounts for new users, return users can login using their username and password. First time users, check your emails for login details.

Once your account is activated (this should be immediate), your username will be your email address. On the platform, you will be identified only by your avatar (e.g., Echidna_231).

Here is a short platform demonstration video that outlines the features of the platform. Please remember the platform works best on Google Chrome.

Answering the questions

You will be asked to provide a quantitative estimate on 7 credibility dimensions, using either a 0-100 point estimate, or a probability estimate with a lower and upper bound. Please outline your reasoning for each of the judgments in the comments box for Q7: Calibration. Use the question navigation panel to skip between entering estimates for the various questions and the Q7 text box.

In the video below, Prof Fiona Fidler (co-CI for the project) introduces the SMART Preprints project, the repliCATS methodology and provides you with an outline of the seven questions you need to answer for each preprint. You can also access comprehensive guidance for each question in the section below.

The 7 credibility dimensions

Click on the credibility dimensions below to learn more about the relevant review question, its purpose and to access detailed guidance on how to answer it.

Question 1: How well do you understand this claim?

Purpose: To understand if anything affects your ability to interpret the specific claim.

Clarification: We know that scientific papers vary in clarity and comprehensibility. It’s possible that a paper:

- is vague;

- is poorly written;

- relies on an unfamiliar procedure;

- contains too much jargon;

- is unclear about exactly what claim is or how it is interpreted;

- is about a concept that you are not familiar with and/or have difficulty conceptualising.

These factors can all contribute to your ability to be able to interpret the claim and may in turn lead to different interpretations by the group. There is a comments box below this question, where you can restate the specific claim in your own words. If there are particular terms or concepts that you have difficulty interpreting, you can list them in this text box as well. Please do not put any discussion of your assessment of the claim in this box. Put all of your reasoning in the final text box, below Question 7: Calibration.

Answering the Comprehensibility question:

We’re asking for this on a scale of 0 to 100. 0 means that you have no idea what the claim means, 100 means it’s perfectly clear to you. This is not an objective measurement – try your best to estimate a number for comprehensibility, and just try to be consistent between papers.

Some papers may be outside of your main fields or use words that you are unfamiliar with. This might cause you to immediately put ‘I have no idea what the claim means’. However, with a little bit of effort, you can usually deduce what is being asked. If after your reading of the paper you still cannot work it out then you should definitely indicate this to us and consider whether that is indicating something about the quality of the research being described.

Tooltip: We know the clarity and comprehensibility of scientific papers varies. We are interested in your honest account of how well you understand this paper and its central claim.

Comments box: We have provided a comment box below the question so you can try to rephrase what you think the claim is about. This will be useful in the Discussion phase to prompt your memory about your initial interpretation of the question and to understand other participants’ interpretations. Please do not put any discussion of your assessment of the claim in this box.

The placeholder text for this textbox is: The clarity and comprehensibility of scientific papers varies. Tell us how well you understand this claim.

Question 2: How consistent is this claim with your existing beliefs?

Purpose: To capture your assessment of the prior plausibility of the claim.

Clarification: Sometimes we hear a claim in a paper and have a strong feeling that it is or is not very plausible either within the context of the experimental design, or more broadly (i.e. relating to a relationship that would generalize across contexts or experimental designs).

These prior beliefs can be useful. We’ve included this question here to allow you to state your prior belief about whether the claim describes a real effect, without considering the design of or the evidence from this particular study.

Don’t spend too much time on this question. In following questions, you will examine the evidence this particular study provides for the claim more critically.

Answering the Plausibility question:

We’re asking for this on a scale of 0 to 100. 0 means that the claim is exactly contrary to your pre-existing beliefs, 100 means it’s perfectly compatible with them. As with Question 1: Comprehensibility, estimate a number and just try to be consistent between papers.

The word ‘plausible’ means different things to different people. For some people, almost everything is ‘plausible’, while other people have a stricter interpretation. Don’t be too focused on the precise meaning of ‘plausible’ – you could also consider words like ‘possible’ or ‘realistic’ here. Try to let us know if some claims are clearly more plausible (or implausible) than others.

Tooltip: How likely would you have been to accept this claim before you considered the evidence from this paper?

Question 3: What is the probability that an independent close replication of this claim would find results consistent with the original paper?

Purpose: The question is asking about a close replication of the specific claim.

Clarification: A close replication is a new experiment that follows the methods of the original study with a high degree of similarity, varying only aspects where there is a high degree of confidence that they are not relevant to the research claim. People often use the term direct replication – however, no replication is perfectly direct, and decisions always need to be made about how to perform replications. To answer this question, you may want to imagine what kinds of decisions you would face if you were asked to replicate this claim, and to consider the effects of making different choices for these decisions.

For this purpose, we consider “consistent with the original paper” to mean a statistically significant effect (defined with an alpha of 0.05) in the same direction as the original study, using the same statistical technique as the original study.

Answering the Replicability question:

In this question, we want you to try and think of reasons why the specific claim may or may not replicate. We understand that your thoughts about the prior plausibility of this claim are likely to influence your judgement regarding this question. However, we’d like you to try and think more critically of other reasons why this particular study may (or may not) replicate, independently of whether the described effect is a real one.

For close replications involving new data collection, we would like you to imagine 100 (hypothetical) new replications of the original study, combined to produce a single, overall replication estimate (i.e., a good-faith meta-analysis with no publication bias). Assume that all such studies have both a sample size that is at least as large as the original study and high power (90% power to detect an effect 50-75% of the original effect size with alpha=0.05, two-sided).

Sometimes it is clear that a close replication of a claim involving new data collection is impossible, or infeasible. In these cases, you should think of data analytic replications, in which the central claim is tested against another pre-existing dataset that provides a fair test. Again, imagine 100 datasets analysed with results are combined to produce a single, overall replication estimate.

There are many things you might consider when making your judgement. The IDEA protocol operates well when a diversity of approaches is combined. There is no single ‘correct’ checklist of things to assess. However, some things you may wish to consider include statistical details, experimental design and contextual information about how the study was undertaken.

For replicability, we ask you to provide a lower bound, upper bound and best estimate. For further details see: The three-step response format.

Tooltip: Assume replications are competent and done in good faith. A close replication is a high-powered collection of new data to test an original study, without altering important features of the study design. Sometimes no such attempt is feasible. In these cases, replications will test claims with matching analyses on similar, pre-existing datasets. Check training materials for details on close replications and data-analytic replications, including how we define “consistent with the original paper” and high statistical power.

Question 4: What is the probability that an independent analysis of the same data would find results consistent with the original claim?

Purpose: To capture your beliefs about the analytic robustness of the claim.

Clarification: The term robustness is used here to represent the stability and reliability of a research finding, given the data. Is the claim likely to be due to an idiosyncratic choice of analytic strategy or not?

Answering the Robustness question:

It might help to think about it in this way: imagine 100 analysts received the original data and devised their own means of investigating the claim (e.g using a different, but appropriate statistical model or technique), how many would find a statistically significant effect in the same direction as the original finding? You should assume that any such analyses are conducted competently and in good faith.

Like Q3: Replicability, we ask you to provide a lower bound, upper bound and best estimate. For further details see: The three-step response format.

Tooltip: Assume all potential analyses are competent and done in good faith. Check training materials for details on how to assess “consistent with the original paper”.

This question has two parts, asking you to give an overall rating, and identify the features of the study that affect its generalizability.

Question 5a: Going beyond close replications and reanalyses, how well would this claim generalize to other ways of researching the question?

Purpose: The question is asking about whether the specific claim would hold up or not under different ways of studying the question.

Clarification: This question is asking about generalizations of the original study or ‘conceptual replications’. We want you to consider generalizations across all relevant features, such as the particular instruments or measures used, the sample or population studied, and the time and place of the study.

Answering the Generalizability (Rating) question:

This question is asked on a scale of 0 to 100, where 0 means the claim is not at all generalizable and 100 means it is completely generalizable.

We know that there are many different features of a given study that potentially limit generalizability, and they may have different levels of concern, so it might be tricky to work out a single rating across all of them. Assess this in whatever way seems best.

You might want to imagine 100 (hypothetical) conceptual replications of the original study, each of which varies just one specific aspect of the study design (e.g. sample, location, operationalisation of a variable of interest,…) while holding everything else constant. Across this hypothetical set of conceptual replications, all relevant aspects of the study are varied in differing ways. In your estimate, how many of these would produce a finding that is consistent with the original study?

Tooltip: We’re asking you to judge whether the results of this claim are due to an idiosyncratic feature of this study, such as the specific sample, setting, time, or the particular instruments or measurements used. How well do you think the result would hold up beyond those limits?

Question 5b: Please select the feature(s), if any, that you think limit the generalizability of the findings.

Purpose: The question is asking you to list the features of the study that raise substantial generalizability concerns.

Clarification: Select the features for which you definitely have generalizability concerns. Don’t select a feature if you simply think that it is possible that the study will not generalize over that feature.

Answering the Generalizability (Features) question:

You can select more than one feature – select all features you think raise substantial generalizability concerns.

If there is a feature that you think raises substantial generalizability concerns, but we have not included then select ‘Other’ and briefly list the feature(s) in the text box. Please do not use this text box to discuss why you think there are generalizability concerns or to give specific details about a feature you have selected. If you have any comments or thoughts on this, please write them in the final text box, below Question 7: Calibration.

Tooltip: There are many features of any dataset. A claim might generalize well over some but fail to generalize over others. We want you to list the features that may significantly limit the generalizability of this claim. Do not discuss the reasons for your concerns about generalizability in this text box. Do that in the text box below.

Question 6: How appropriate is the statistical test of this claim?

Purpose: The question is asking about the extent to which a set of statistical inferences, and their underlying assumptions, are appropriate and justified, given the research hypotheses and the (type of) data.

Clarification: This question focuses on the extent to which the statistical models/tests are appropriate for testing the research hypotheses. For instance, assumptions may have been violated that would render the chosen test(s) inappropriate, or the statistical model of choice may not be appropriate for the type of data.

Answering the Statistical Validity question:

We’re asking for this on a scale of 0 to 100. 0 means that the statistical analyses are not at all appropriate to test the research hypotheses, 100 means they are entirely appropriate to test the research hypotheses. Again, try to estimate a number and be consistent in the criteria you apply when assessing statistical validity between papers.

Tooltip: By “appropriate” we mean that the correct test for the design is done, precautions are taken to minimize risk of bias and error, and assumptions are reasonable.

Question 7: How reasonable and well-calibrated are the conclusions drawn from the findings in this paper?

Purpose: The question is asking about the extent to which the conclusions drawn from this claim are warranted.

Clarification: This question relates to the stated interpretation of the specific claim, whether the conclusions match the evidence presented, and the limitations of the study. Sometimes a paper’s conclusion(s) might extend beyond what is indicated by the results reported.

Answering the Calibration question:

This question uses the same 3-point format as Question 3: Replicability and you should assess it in the same way. For further details see: The three-step response format.

Tooltip: By “well-calibrated” we mean that the conclusions match the evidence presented, without overstepping the mark.

Comments box: We have provided a text box below the question. Please use the text box for this Question 7: Calibration to capture all of your thinking about your assessment of this paper. For further details see Providing a rationale.

Providing a rationale

Please use the text box below Question 7: Calibration to capture all of your thinking about your assessment of this paper. Note that you can navigate to this text box – at any stage, so you can drop comments in as you go. You don’t have to wait until you get to this question before writing your thoughts.

Collecting all of your reasoning in this one spot allows us to understand how you assessed the claim, which factors were important and which were less influential in determining your judgements. This information may also prove very useful during the discussion phase.

Please write your rationale in complete sentences and in a tone appropriate to it being seen by the author of the paper. Note that you are not writing a full peer-review of the paper here – you only need to comment on the specific claim that you are assessing. However, you should write in a similar tone as if it were a peer review.

You do not need to confine yourself to the questions that we have asked. However, if you are commenting on a specific Credibility Dimension (e.g. Generalizability or Calibration) then please indicate this with a subheading. You are welcome to comment on aspects of the paper beyond the specific claim, if you wish.

In Round 2, please make sure you provide your final thoughts about your assessment. You can copy your response from Round 1 as a start but make sure you describe any changes to your thinking and why, especially if it relates to the Discussion phase.

Please do not use your own name or the name of any other participants, or any other distinguishing remark such as your affiliation or your professional position. Judgements will eventually be made public and we must be able to keep them anonymous. If you want to refer to yourself or a team member, please only use the anonymised screen names.

The placeholder text for this textbox is: Use this textbox for all comments about your assessment of this claim, including the specific questions above, such as the plausibility of the claim, its generalizability, or its interpretation. You don’t need to confine your comments to the questions that we have asked, but if you are commenting on a specific question, please indicate that with a subheading. Please write in full sentences and in a tone appropriate to the author seeing this comment.

The three-step response format

Questions 3, 4 and 7 ask you to provide three separate estimates: a lower bound, upper bound and best estimate. There is good evidence that asking you to consider your lower and upper bounds before making your best estimate improves the accuracy of your best estimate.

We will illustrate this approach using Question 3: Replicability as an example. Think about your assessments of Question 4: Robustness and Question 7: Calibration in a similar manner.

- First, consider all the possible reasons why a claim is unlikely to successfully replicate. Use these to provide your estimate of the lowest probability of replication.

- Second, consider the possible reasons why a claim is likely to successfully replicate. Use these to provide an estimate of the highest probability of replication.

- Third, consider the balance of evidence. Provide your best estimate of the probability that a study will successfully replicate.

Some things to consider about the three-step format:

- Providing a lower estimate of 0 means you believe a study would never successfully replicate, not even by chance (i.e. you are certain it will not replicate). Providing an upper estimate of 100 means you believe a study would never fail to replicate, not even by chance (i.e. you are certain it will replicate).

- Answers above 50 indicate a belief that the study would be more likely to replicate than not. Answers below 50 indicate a belief that the study would be more likely to not replicate. Providing an estimate of 50 means that you believe the weight of evidence is such that it is just as likely as not that a study will successfully replicate.

- Use the width of the interval between your upper and lower estimates to reflect your level of uncertainty about your assessments. Assessments that you are more uncertain about should have wider intervals. Intervals extending above and below 50 indicate that you believe there are reasons both for and against the study replicating.

Getting the most out of group discussion

Once you have submitted your Round 1 estimates, you will be returned to the platform home page, where the paper should now have the “Round 2” tag. If you click on the paper again, you will be able to view estimates and comments made by other participants in your group. If you are the first to complete your Round 1 assessment, only your results will show and you may have to check back later.

After all members of the group have completed Round 1, you will have the Discussion phase. This is a key component of the IDEA protocol. It provides an opportunity to resolve differences in interpretation, and to share and examine the evidence.

In the interest of time and efficiency, the facilitator will focus the group discussion on those questions where opinions within the group diverged the most. However, the purpose of the discussion is not to reach a consensus, but to investigate the underlying reasons for these (divergent) estimates. By sharing information in this way, people can reconsider their judgements in light of any new evidence and/or diverging opinions, and the underlying reasons for those opinions.

Here are some tips and ground rules for the Discussion phase.

We encourage you to review others’ assessments and – in particular – examine any comments in the final text box below Question 7: Calibration, because this is where assessors capture their thoughts and reasoning on the various dimensions of the paper’s credibility. You can use the comments feature on the platform to react to other participants’ judgements, interrogate their reasoning, and ask questions.

Once again, it is important that you do not use any participant names in comments on the platform, not even your own. Comments will eventually be made public, and we need to keep these anonymous. If you want to refer to other participants, please use the anonymous user name (avatar) they have been assigned e.g. Koala11.

Some ground rules for the Discussion phase, regardless of whether you are leaving comments on the online platform or discussing directly:

- Respect that the group is composed of a diversity of individuals;

- Consider all perspectives in the group. In synchronous discussion, allow an opportunity for everyone to speak;

- Don’t assume everyone has read the same papers or has your skills – explain your reasoning in plain language;

- If someone questions your reasons, they are usually trying to increase their own understanding. Try to provide simple and clear justifications;

- Try to be open-minded about new ideas and evidence.

The following list may be useful to consider when reviewing and commenting on judgements. You do not have to work through these systematically, but consider which may be relevant:

- What did people believe the claim being made was? Was the paper clear? Did everyone understand the information and terms in the same way? If interpretations of the central claim (or any claim) vary, instead of trying to resolve this, focus on discussing what that means for the credibility of the paper.

- Consider the range of estimates in the group and ask questions about extreme values. What would cause someone to provide a high/low estimate for this question?

- Very wide intervals suggest unconfident responses. Are these based on uncertainties of interpretation or are these participants aware of contradictory evidence?

- Very narrow intervals suggest very confident responses. Do those participants have extra information?

- It’s ok if you don’t have good evidence for your beliefs – please feel free to state this.

- If you have changed your mind since your Round 1 estimates it’s good to share this. Actively entertaining counterfactual evidence and beliefs improves your judgements.

- If you disagree with the group that is fine. Please state your true belief when completing Round 2 estimates. This represents the true uncertainty regarding the question, and it should be captured.

- Consider raising counterarguments, not to be a nuisance, but to make sure the group considers the full range of evidence.

- As a group, avoid getting bogged down in details that are not crucial to answering the questions, or in trying to resolve differences in interpretation. Focus on sharing your reasons, not on convincing others of specific question answers.

Updating assessments & reasoning (Round 2)

You can go into Round 2 as soon as you have finished Round 1, to review what others have said about the paper and its claims. Based on that, you can start entering Round 2 judgements.

The Discussion will provide additional opportunities to examine and clarify the reasons for the variation in judgements within your group. You can continue to update your Round 2 judgements as you and your group go through and discuss the paper. You can also come back to a paper after you’ve had some time to think about it. Claims will remain open for the duration of the workshop. Let the repliCATS team know if you might need some more time.

Please make sure you use the text box below Question 7: Calibration to provide your final thoughts about your assessment. You can copy your response from Round 1 but make sure you describe any changes to your thinking and why, especially if it relates to the Discussion phase. However, remember to keep all comments anonymous and do not refer to any of your group by name.

Whether or not you want to update your estimates is entirely up to you. In some instances, your views and opinions might not have shifted after discussion, but perhaps not. Either decision is absolutely fine and provides useful information. Make sure you hit the submit button to log your Round 2 estimates, even if your assessments have not changed. Remember, your Round 2 estimates are private judgements, just like your Round 1 estimates, so there is no need to worry about what others might do or think about your judgements, and whether or not you have changed your mind.

The repliCATS Code of Conduct

We encourage all reviewers to read and adhere to the repliCATS Code of Conduct: https://osf.io/a5ud4/

Downloadable Guide

A pdf version of this guide, if you would like it, can be downloaded here: https://osf.io/469zd.